For the hearing impaired, communicating with others can be a real challenge, and it is especially hard when a deaf parent is trying to understand what their child needs, since the child is too young to learn sign language. Mithun Das came up with a novel solution that combines a mobile app, machine learning, and a Neosensory Buzz bracelet to open this channel of communication.

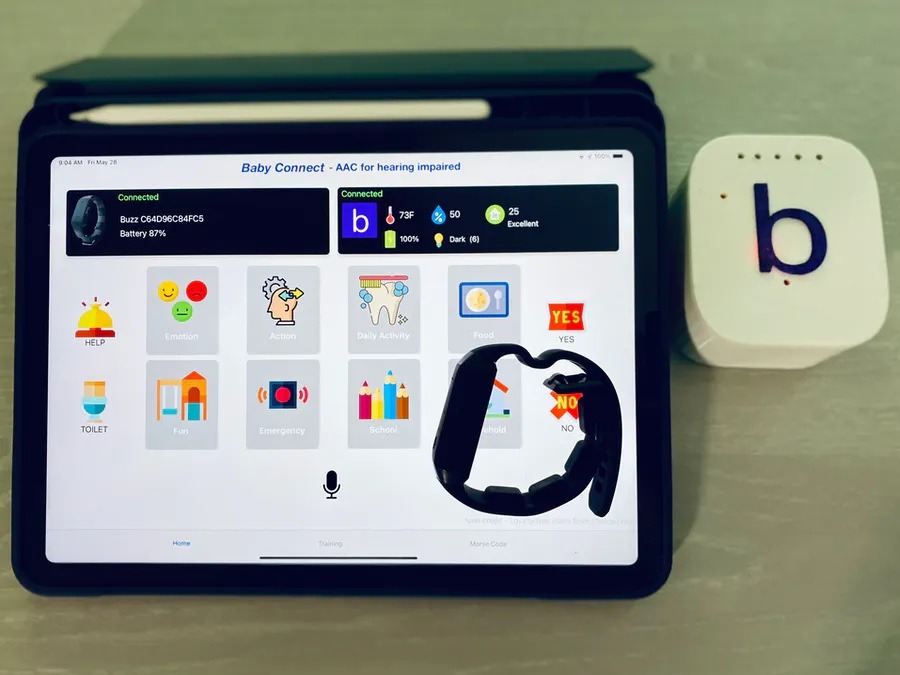

Called Baby Connect, Das’ system uses a mobile app with a series of images that correspond to a child's feelings, actions, or wants and needs. When something is requested, such as wanting to take a nap, the request is mapped to a Morse-code-like language that buzzes the four haptic motors on the Neosensory Buzz in a specific pattern. For instance, "dislike" is mapped to a dot, a dash, and then a dot, while "yes" is a single dot.
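To make the dot-and-dash idea concrete, here is a minimal Python sketch of how a word could be turned into a sequence of motor steps. Only the "yes" and "dislike" patterns come from the post; the timings, the intensity value, the `PATTERNS` table, and the `encode` function are hypothetical, and the sketch assumes all four motors fire together for each symbol. It does not use the Neosensory SDK and is not the project's actual code.

```python
# Hypothetical timing and intensity values; the post does not give the real ones.
DOT_MS = 150
DASH_MS = 450
GAP_MS = 150       # silent pause between symbols (assumed)
INTENSITY = 255    # assumed full motor intensity
NUM_MOTORS = 4     # the Neosensory Buzz has four haptic motors

# Only "yes" and "dislike" are taken from the post; others would be added the same way.
PATTERNS = {
    "yes": ".",
    "dislike": ".-.",
}


def encode(word):
    """Turn a word into a list of (motor_intensities, duration_ms) steps."""
    symbols = PATTERNS[word]
    steps = []
    for i, symbol in enumerate(symbols):
        if symbol == ".":
            duration = DOT_MS
        elif symbol == "-":
            duration = DASH_MS
        else:
            raise ValueError(f"unknown symbol {symbol!r}")
        # Assumption: every motor buzzes at the same intensity for each symbol.
        steps.append(([INTENSITY] * NUM_MOTORS, duration))
        # Insert a silent gap between symbols, but not after the last one.
        if i < len(symbols) - 1:
            steps.append(([0] * NUM_MOTORS, GAP_MS))
    return steps
```

Calling `encode("dislike")` yields five steps (buzz, pause, long buzz, pause, buzz), which a device driver could then play back in order. Spreading symbols across different motors instead of firing all four at once would be an equally plausible design.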

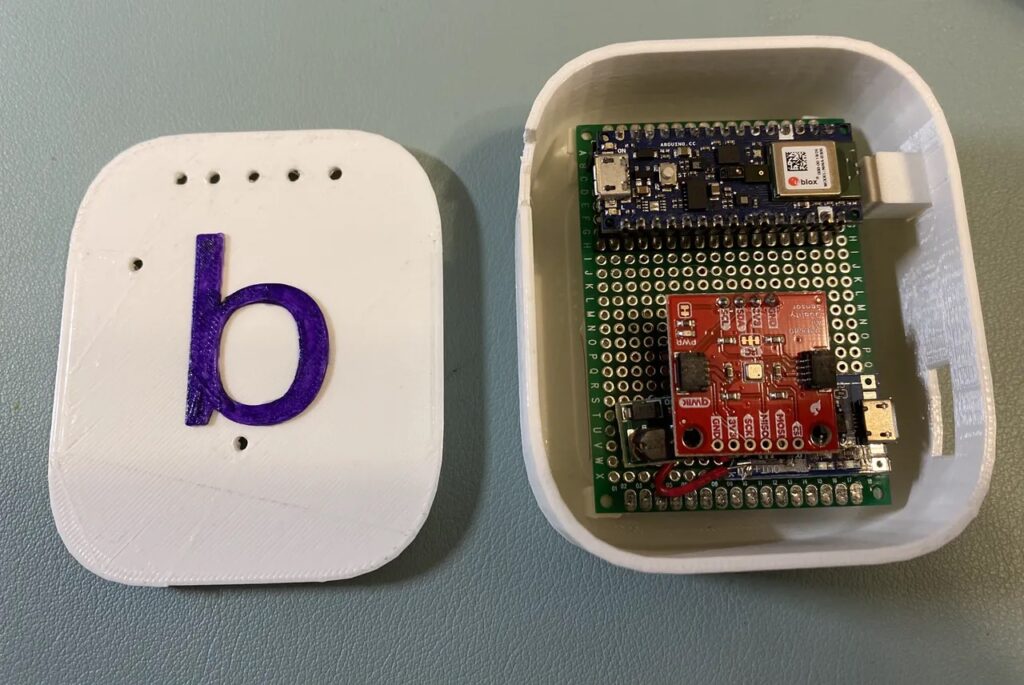

Baby Connect also has more advanced features, including baby activity monitoring and environmental logging. Because deaf parents cannot hear the differences between their baby's cries, the Nano 33 BLE Sense that controls the device runs a model trained with Edge Impulse that distinguishes between cries of pain, hunger, and general malaise. Finally, the app can act as a speech-to-text converter that automatically turns spoken words into mapped vibrations.
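A classifier like this typically outputs one confidence score per label, and the device still has to decide which cry, if any, to report. The Python sketch below shows one common approach: pick the highest-scoring label and only report it above a confidence threshold. The label names, the 0.6 threshold, and the `pick_cry_label` function are assumptions for illustration; this does not use the Edge Impulse SDK and is not taken from the project.

```python
# Hypothetical post-processing of per-label cry scores; the threshold is assumed.
DEFAULT_THRESHOLD = 0.6


def pick_cry_label(scores, threshold=DEFAULT_THRESHOLD):
    """Return the most likely cry label, or None if no label is confident enough.

    `scores` maps a label name (e.g. "pain", "hunger", "malaise") to a
    confidence value between 0 and 1.
    """
    if not scores:
        raise ValueError("no scores provided")
    # Choose the label with the highest confidence.
    label = max(scores, key=scores.get)
    # Suppress uncertain predictions so the parent is not alerted needlessly.
    return label if scores[label] >= threshold else None
```

For example, `pick_cry_label({"pain": 0.1, "hunger": 0.8, "malaise": 0.1})` returns `"hunger"`, while a flat set of scores returns `None`. The reported label could then be passed to a vibration pattern in the same way as the app's other words.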

You can read more about this incredible project on Hackster.io.

The post This system uses machine learning and haptic feedback to enable deaf parents to communicate with their kids appeared first on Arduino Blog.
